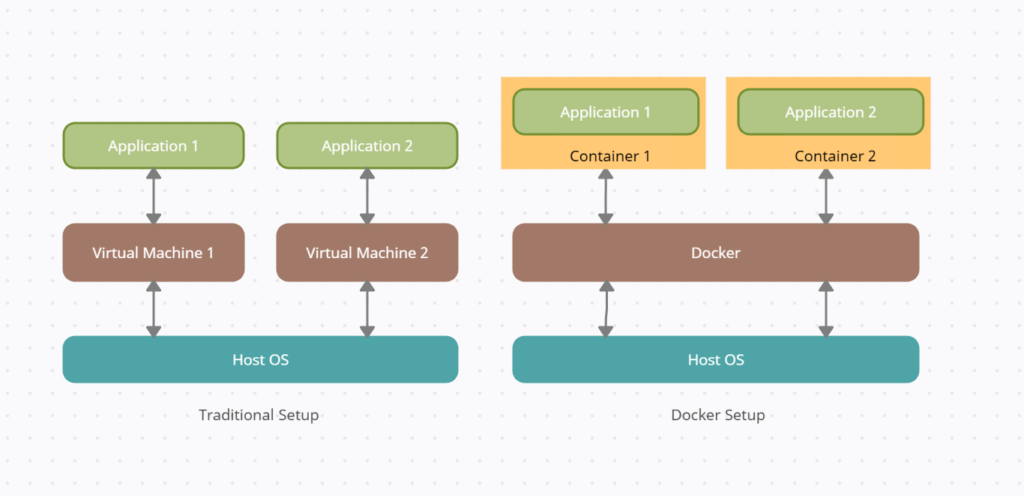

By now most developers are familiar with containers and Docker, and with how the two words get used interchangeably, much like "search" and "Google". This article is mostly a note to self: a reference for some of the basic ideas and commands around Docker.

Docker, and containers in general, came to solve the classic software problem: "It works on my machine, but not in Prod." Take a typical .NET application on your laptop. It depends on whichever framework SDK or runtime is installed, and the project may have other dependencies too; back in the .NET Framework days you might have needed to install Enterprise Library 5.0 or something like SpreadsheetGear. The point is that these dependencies have to be installed in every environment, and when you deploy to the dev server, chances are you forget one of them. Sometimes (most of the time) the errors you get are misleading, and only after hours of debugging do you realize you forgot to install that special one.

Docker helps solve that problem. All the dependencies are listed in a Dockerfile, and once it is configured, the app runs in an isolated container, so it makes no difference whether it's your laptop or the production server. (Except for the crashes I get from the limited memory on my laptop. 😉) The value of this for CI/CD deployments, such as Azure DevOps pipelines, can't be stressed enough: it gives you the confidence that if it works on my machine, it will work on the server.

Docker creates lightweight containers from Docker images, and those containers can run on any system with Docker installed (Docker Desktop on a developer laptop, Docker Engine on a server). With a typical .NET application, the Dockerfile describes a multi-stage build: each step adds a layer on top of the previous one, pulling in the dependencies the Dockerfile lists, and the final stage keeps only what the app needs to run. Once the Dockerfile is written and you build an image from it, you can repeat the same process in every environment from the same Dockerfile. This is what brings the consistency needed across environments.

Docker images for ASP.NET Core | Microsoft Docs

If you've been a Microsoft developer since the old days, one of the first things you'll appreciate is how much Microsoft Docs has evolved. Everything is explained so neatly that these days I rarely have to look elsewhere. Looks like they have an equally well-paid team writing the docs. 🙂

When you enable Docker support while creating a new project, the generated Dockerfile has the information needed to build a Docker image, pulling the base images from the Microsoft Container Registry (mcr.microsoft.com). If you work in an enterprise environment, you'll probably have to change these settings, since many companies run their own container registry with a pre-approved list of images.

Standard Dockerfile from Microsoft:

```dockerfile
# https://hub.docker.com/_/microsoft-dotnet
FROM mcr.microsoft.com/dotnet/sdk:5.0 AS build
WORKDIR /source

# copy csproj and restore as distinct layers
COPY *.sln .
COPY aspnetapp/*.csproj ./aspnetapp/
RUN dotnet restore

# copy everything else and build app
COPY aspnetapp/. ./aspnetapp/
WORKDIR /source/aspnetapp
RUN dotnet publish -c release -o /app --no-restore

# final stage/image
FROM mcr.microsoft.com/dotnet/aspnet:5.0
WORKDIR /app
COPY --from=build /app ./
ENTRYPOINT ["dotnet", "aspnetapp.dll"]
```
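In an enterprise setup, the part you usually change is the registry prefix in the two `FROM` lines. A minimal sketch as a shell function, assuming your company mirrors the images under a hypothetical `registry.example.com/mirror` with the same repository paths and tags as `mcr.microsoft.com`. `use_internal_registry` is a made-up helper name; editing the two lines by hand works just as well.

```shell
# Hypothetical helper: point the FROM lines of a Dockerfile at an internal mirror.
# Assumes the mirror keeps MCR's repository paths and tags, and that the
# Dockerfile uses upper-case FROM (the usual convention).
use_internal_registry() {
  dockerfile="$1"   # path to the Dockerfile to edit
  mirror="$2"       # e.g. registry.example.com/mirror (placeholder)
  # Only FROM lines are touched; the tag (:5.0) and the "AS build" alias are kept.
  sed "/^FROM /s#mcr\.microsoft\.com/#${mirror}/#" "$dockerfile" > "$dockerfile.tmp" &&
    mv "$dockerfile.tmp" "$dockerfile"
}
```

For example, `use_internal_registry Dockerfile registry.example.com/mirror` turns `FROM mcr.microsoft.com/dotnet/sdk:5.0 AS build` into `FROM registry.example.com/mirror/dotnet/sdk:5.0 AS build`.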

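One thing that page doesn't talk about is .dockerignore. We don't want our local bin and obj folders copied into the image build. So create a .dockerignore file at the same level as the Dockerfile and add these two lines, plus any other folders you want to ignore. The patterns are matched from the root of the build context, so the `**/` prefix is what catches bin and obj inside project folders such as aspnetapp/:

```
**/bin
**/obj
```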
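To see which folders the ignore patterns have to catch, you can list them from the Dockerfile's folder. A small sketch; `list_build_output` is a hypothetical helper. In the sample layout these folders sit inside the project folder (for example `./aspnetapp/bin`), not at the root of the build context, which is why the patterns need to match at any depth.

```shell
# Hypothetical helper: list every bin and obj folder under a build context
# (default: the current folder). -prune stops find from descending into them,
# so nested folders inside bin/obj are not printed.
list_build_output() {
  find "${1:-.}" -type d \( -name bin -o -name obj \) -prune -print
}
```

Run next to the Dockerfile after a local build, it should print `./aspnetapp/bin` and `./aspnetapp/obj` for the sample project.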

Let's now look at some common Docker commands, assuming the image is called cheers.

```shell
docker build -t bobbythetechie/cheers .
```

In the terminal, make sure you are in the folder that contains the Dockerfile. The command above builds the image and tags it with the given name; the trailing `.` tells Docker to use the current folder as the build context. It's common practice to start the name with your Docker Hub ID, followed by the name you want to give the image. This keeps the name unique, and it's the name format Docker Hub expects if you ever want to publish the image there.
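If you'd rather not change folders first, `docker build` also accepts `-f` to point at a Dockerfile elsewhere, with the last argument as the build context (the folder whose files are sent to Docker). A minimal sketch; `build_image` is a hypothetical helper, and setting `DOCKER=echo` turns it into a dry run that only prints the arguments.

```shell
# Hypothetical helper: build and tag an image from another folder.
# -f names the Dockerfile; the last argument is the build context.
# Assumes the Dockerfile sits at the root of that context, as in the sample.
# Set DOCKER=echo to print the docker arguments instead of running them.
build_image() {
  context="$1"   # e.g. ./src
  image="$2"     # e.g. bobbythetechie/cheers
  ${DOCKER:-docker} build -f "$context/Dockerfile" -t "$image" "$context"
}
```

So `build_image ./src bobbythetechie/cheers` runs `docker build -f ./src/Dockerfile -t bobbythetechie/cheers ./src`.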

```shell
docker build -t bobbythetechie/cheers:v1.0.0 .
docker build -t bobbythetechie/cheers:1.0.0 .
docker build -t bobbythetechie/cheers .
```

To version an image, add a tag after a colon, as shown above. If you don't provide one, Docker tags the image as `latest`.
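One thing to keep in mind: `latest` is just the default tag name, not a pointer to the newest build. If you only build `:1.0.0`, an older image tagged `latest` stays as it was. A minimal sketch that applies both tags in one build (`docker build` accepts `-t` more than once); `build_release` is a hypothetical helper, and `DOCKER=echo` gives a dry run.

```shell
# Hypothetical helper: build once and tag the result with an explicit version
# and with latest, so a plain "docker run bobbythetechie/cheers" uses this build.
# Run it from the folder that contains the Dockerfile.
# Set DOCKER=echo to print the docker arguments instead of running them.
build_release() {
  image="$1"     # e.g. bobbythetechie/cheers
  version="$2"   # e.g. 1.0.0
  ${DOCKER:-docker} build -t "$image:$version" -t "$image:latest" .
}
```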

```shell
docker run -p 8080:80 bobbythetechie/cheers
```

Finally, run the Docker image. The reason I included the `-p` flag is that it's one of the things I always forget. When we build Docker images, and especially when we use Docker Compose, we can have multiple projects running at once: say three web APIs along with a messaging service like Kafka and all the dependencies that come with it. To keep these services from clashing over the same port numbers, each image declares the port its app listens on inside the container, and when we run it we choose which host port each service is published on.

```dockerfile
# final stage/image
FROM mcr.microsoft.com/dotnet/aspnet:5.0
WORKDIR /app
EXPOSE 80
COPY --from=build /app ./
ENTRYPOINT ["dotnet", "aspnetapp.dll"]
```

You can see that I modified the standard Microsoft Dockerfile to add `EXPOSE 80`. This is the port inside the container: `EXPOSE` documents that the app listens on port 80 (the ASP.NET Core 5.0 base image already configures the app to listen there), but on its own it doesn't make the app reachable from outside. When we actually run the image, we map that port to a port on the host, which we use to access the app. In the example below, port 80 inside the container maps to port 8080 on the host, so from the host we can access the app only through 8080, e.g. http://localhost:8080.

```shell
docker run -p 8080:80 bobbythetechie/cheers
```
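The same mapping is what keeps several services from clashing: every container can listen on port 80 internally as long as each one gets its own host port. A minimal sketch that starts copies of one image on different host ports; `run_on_ports` is a hypothetical helper, `-d` runs each container in the background, and `DOCKER=echo` gives a dry run.

```shell
# Hypothetical helper: start one container per host port given, each mapping
# that host port to port 80 inside the container.
# Set DOCKER=echo to print the docker arguments instead of running them.
run_on_ports() {
  image="$1"   # e.g. bobbythetechie/cheers
  shift
  for host_port in "$@"; do
    ${DOCKER:-docker} run -d -p "$host_port:80" "$image"
  done
}
```

With `run_on_ports bobbythetechie/cheers 8081 8082 8083`, the three copies would answer on http://localhost:8081, :8082 and :8083. In Docker Compose, the same mapping goes in each service's `ports:` entry, e.g. `"8081:80"`.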

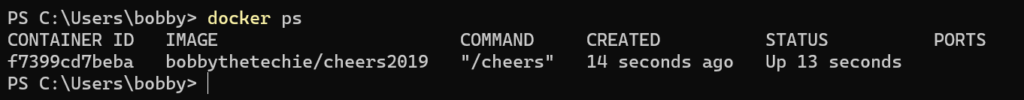

To see the list of containers running on the system, we use the `ps` command.

```shell
docker ps
```

The output looks similar to the image above. It's useful when you have lots of containers, since Visual Studio can be misleading at times.
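When the list gets long, `docker ps` can filter and format its output. A minimal sketch using the `--filter ancestor=...` and `--format` options to show only the containers started from one image; `ps_for_image` is a hypothetical helper, and `DOCKER=echo` gives a dry run.

```shell
# Hypothetical helper: list running containers created from the given image,
# as a table of container ID, status and port mappings.
# Add -a after "ps" to include stopped containers as well.
# Set DOCKER=echo to print the docker arguments instead of running them.
ps_for_image() {
  ${DOCKER:-docker} ps --filter "ancestor=$1" \
    --format 'table {{.ID}}\t{{.Status}}\t{{.Ports}}'
}
```

The Ports column shows the mapping from the earlier run command, along the lines of `0.0.0.0:8080->80/tcp`.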

Next is pushing the image to Docker Hub, so that you can use it anywhere (needs internet 🙂).

```shell
docker push bobbythetechie/cheers
```

You need to be logged in to Docker Hub for this to work (run `docker login` first if you aren't); after that, the image is pushed to Docker Hub. In enterprise environments it will be a company registry instead, but the idea remains the same.
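For a company registry, the image name has to start with the registry host, so you tag the local image again before pushing. A minimal sketch, assuming a hypothetical `registry.example.com` that you have already logged in to with `docker login registry.example.com`; `push_to_registry` is a hypothetical helper, and `DOCKER=echo` gives a dry run.

```shell
# Hypothetical helper: re-tag a local image under another registry and push it.
# Assumes "docker login <registry>" has already succeeded.
# Set DOCKER=echo to print the docker arguments instead of running them.
push_to_registry() {
  registry="$1"   # e.g. registry.example.com (placeholder)
  image="$2"      # e.g. bobbythetechie/cheers:1.0.0
  ${DOCKER:-docker} tag "$image" "$registry/$image" &&
    ${DOCKER:-docker} push "$registry/$image"
}
```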

Now that the image is on Docker Hub, all you need to do in a new environment is run the same command. This time Docker doesn't find the image locally, so it downloads it from Docker Hub and runs it the same way. Because the image name includes your unique Docker Hub ID, Docker knows exactly which image to download.

```shell
docker run -p 8080:80 bobbythetechie/cheers
```
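Behind the scenes, Docker expands a short name into a fully qualified reference, and that is how it knows where to download from. A simplified sketch of those rules, written as a hypothetical `normalize_ref` helper: a first part containing `.` or `:` (or equal to `localhost`) is a registry host, otherwise the image comes from Docker Hub (`docker.io`); single-part names go under `library/`; and a missing tag becomes `latest`. Digest references (`@sha256:...`) are not handled.

```shell
# Hypothetical helper: a simplified model of Docker's image-name expansion.
normalize_ref() {
  ref="$1"
  first="${ref%%/*}"   # text before the first slash
  case "$ref" in
    */*)
      case "$first" in
        *.*|*:*|localhost) registry="$first"; repo="${ref#*/}" ;;  # explicit registry host
        *) registry="docker.io"; repo="$ref" ;;                     # Docker Hub user/org
      esac ;;
    *) registry="docker.io"; repo="library/$ref" ;;                 # official image
  esac
  # Add the default tag only if the last path component has no tag.
  case "${repo##*/}" in
    *:*) ;;
    *) repo="$repo:latest" ;;
  esac
  printf '%s\n' "$registry/$repo"
}
```

So `normalize_ref bobbythetechie/cheers` prints `docker.io/bobbythetechie/cheers:latest`, while `normalize_ref mcr.microsoft.com/dotnet/aspnet:5.0` keeps the Microsoft registry unchanged.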

I guess that's it: some of the Docker basics for a .NET developer. There are a few more changes and configurations when working in an enterprise environment, but the basic idea remains the same. And even though it takes some trial and error at first to get things right when multiple systems are involved, once it's configured you can rest assured it will work every time, everywhere.